Swayam AgrawalI am a PreDoctoral Researcher at Google DeepMind, in the Machine Learning & Optimization team where I work on Gemini and compute-efficient visual generative models. I am currently working with Prateek Jain, Sujoy Paul, and Aditya Kusupati. I received my Bachelor's degree in Computer Science and Engineering from with Honors from IIIT Hyderabad. As an undergraduate, I was fortunate to work broadly on Robotics, and Computer Vision alongside Prof. Madhava Krishna and Dr. Sourav Garg at the Robotics Research Center. Email / GitHub / Google Scholar / Twitter / LinkedIn |

|

ResearchMy research interests, rooted in the philosophy that the local unit of intelligence is FLOPS, span large model efficiency and elasticity (e.g., sparsity, adaptive compute), as well as representation learning, multimodal systems, and visual generative models. |

Publications |

|

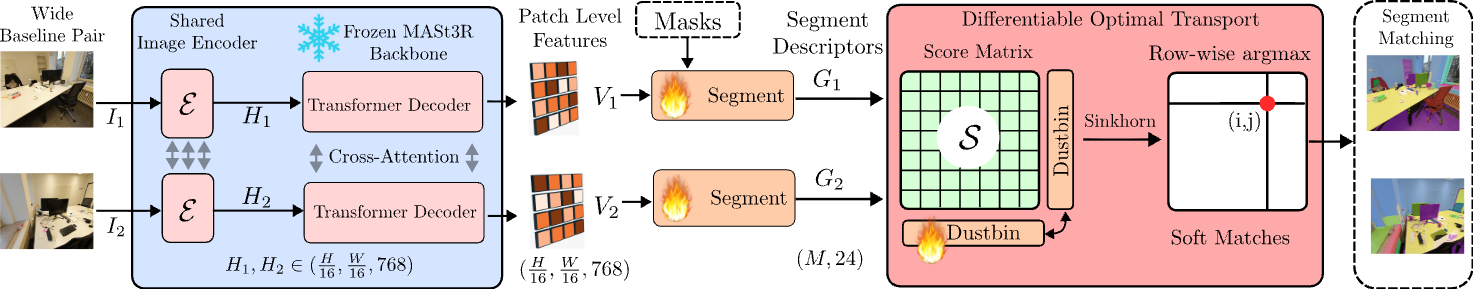

SegMASt3R: Geometry Grounded Segment MatchingRohit Jayanti*, Swayam Agrawal*, Vansh Garg*, Siddharth Tourani, Sourav Garg, et. al. NeurIPS 2025 (Spotlight 🌟) arxiv / code / website / In this work, we establish image segment matching as a benchmark task & we propose a novel model architecture which enables high performance downstream on 3D Instance Mapping & Object-Relative Navigation. Segment matching is an important intermediate task in computer vision that establishes correspondences between semantically or geometrically coherent regions across images. While 2D Foundation models (e.g, DINOv2, SAM2) outperform a 3D foundation model (MASt3R) off-the-shelf for this task, fine-tuning both with a simple segment-matching head alongside SuperGlue style matching results in a surprising trend inversion with SegMASt3R achieving state-of-the-art performance, proving that explicit geometric reasoning is essential. |

|

O3D-SIM: Open-set 3D semantic instance maps for vision language navigationLaksh Nanwani, Kumaraditya Gupta*, Aditya Mathur*, Swayam Agrawal, et. al. Advanced Robotics Journal 2024 arxiv / code / website / In this work, we extend instance-level semantic mapping to 3D. Using foundational models for object recognition, segmentation, and feature extraction, it creates a 3D point cloud with instance-level embeddings that enable language-guided navigation and object queries. The method improves both quantitative task success rates and qualitative instance identification, outperforming closed-set approaches in recognizing unseen objects. |

|

* denotes equal contribution |

|

Design and source code from Jon Barron's website. Jekyll fork from Leonid Keselman. |